EnvoyProxy 1: Reverse Proxy


Abstract

A proxy server is a server application that acts as an intermediary between a client requesting a resource and a server providing that resource; it typically sits at the edge of the client organization's network. A reverse proxy sits at the edge of the resource provider's network. It provides a unified interface to clients while allowing the provider's organization to load balance, rate limit, terminate TLS, perform compression, and route traffic to different underlying services. EnvoyProxy is a high-performance, flexible reverse proxy used in numerous contexts, and service mesh orchestration layers such as Istio are built on top of EnvoyProxy and Kubernetes. Unfortunately, the power of EnvoyProxy comes at the considerable cost of high complexity.

This blog entry attempts to unravel that complexity and provide a practical demonstration of how to get a working EnvoyProxy server running. Subsequent blog entries will expand upon this basic knowledge to add additional functionality such as local rate limiting, TLS termination, load balancing, and more.

Introduction

What is a Proxy?

A proxy server is a dedicated software server that receives network requests from internal clients, makes the request to an external service, and returns the result to the originally requesting internal client.

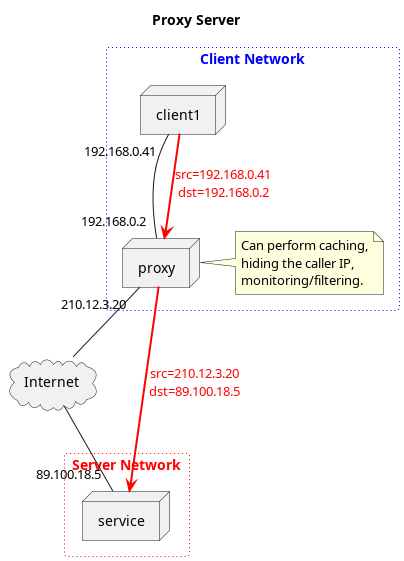

In Image 1, we can see that the proxy server lives within the network of some organization, such as a university or a corporation. Suppose client1 makes a web request to the service; for example, they browse to http://www.example.com. Rather than sending this request directly from their address at 192.168.0.41 to the service at 89.100.18.5, they instead send it to the proxy at 192.168.0.2. The proxy receives this request and sends its own request to the service, using its own address, 210.12.3.20, as the source. When the proxy receives a response, it sends this response back to the original requester, client1.

Proxies have a few effects.

- The service does not know which client sent the original request; it always sees the proxy as the source address of the request.

- The proxy can provide additional functions, such as caching common requests to external resources.
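The caching function mentioned above can be sketched in a few lines of Python. This is an illustrative assumption, not any particular proxy's implementation. The `CachingProxy` class and its injected `fetch` function are hypothetical, and a real cache would also honor expiry rules such as Cache-Control headers, which this sketch ignores.

```python
from typing import Callable, Dict


class CachingProxy:
    """A toy forward proxy that answers repeated requests from a local cache."""

    def __init__(self, fetch: Callable[[str], bytes]):
        self.fetch = fetch                  # performs the real request to the external service
        self.cache: Dict[str, bytes] = {}
        self.upstream_calls = 0             # how many requests actually left the network

    def get(self, url: str) -> bytes:
        if url not in self.cache:
            # Only the proxy contacts the service, so the service sees the proxy's address.
            self.upstream_calls += 1
            self.cache[url] = self.fetch(url)
        return self.cache[url]
```

If two clients ask for http://www.example.com in a row, only the first request reaches the external service. The second is answered from the proxy's memory.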

Proxies are commonly used in front of school networks to perform content filtering, e.g. blocking requests for explicit material. They can also be used to conceal the origin or destination of requests from eavesdropping parties.

Related concepts to proxies and how they are different:

- Network Router: A router sits at the edge of the client's network, very much like a proxy. The primary difference is that a router is typically a hardware device with much more limited functionality. At the network edge, its main job is to forward packets and perform network address translation (NAT). When doing so, packets are only modified at the IP/TCP header level, and their contents are not inspected. In most practical cases, a router is sufficient and a proxy is not needed.

What is a Reverse Proxy?

A reverse proxy is similar to a normal proxy in the sense that it receives connections from clients, makes requests to servers, and returns the result to the client. The difference, however, is that the reverse proxy lives on the server network; it receives requests from outside the organization and directs them inward.

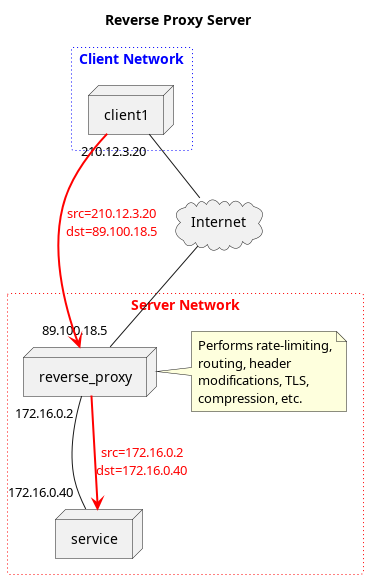

In Image 2, the request still travels from the client to the service, but the proxy now exists on the server network rather than the client network. The client makes a request to the publicly visible IP address at 89.100.18.5, but beyond this, it receives no information about the underlying service that handles the request. The reverse proxy makes the request to the service on behalf of the client and returns the response. Many online services are built to handle large numbers of users and very complicated functionality. A reverse proxy is positioned so that logic common to multiple services can be performed in a consistent manner.
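To make the idea concrete, here is a minimal sketch of a reverse proxy built only from Python's standard library. It is not how Envoy works internally. It only shows the core loop:

- receive a client request,
- make the same request to a hidden upstream service on the client's behalf,
- relay the response.

The `make_proxy` function, the GET-only handling, and the 502 reply for an unreachable upstream are assumptions made for this illustration.

```python
import http.client
import http.server


class ReverseProxyHandler(http.server.BaseHTTPRequestHandler):
    """Relays each GET request to a single upstream service (GET only, for brevity)."""

    def do_GET(self):
        upstream_host, upstream_port = self.server.upstream
        conn = http.client.HTTPConnection(upstream_host, upstream_port, timeout=5)
        try:
            # Make the same request to the upstream on behalf of the client.
            conn.request("GET", self.path)
            upstream = conn.getresponse()
            status, body = upstream.status, upstream.read()
            content_type = upstream.getheader("Content-Type", "application/octet-stream")
        except (OSError, http.client.HTTPException):
            # The upstream could not be reached; answer as a gateway would.
            self.send_error(502, "Bad Gateway")
            return
        finally:
            conn.close()
        # Relay the upstream's response; the client only ever talks to the proxy.
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def make_proxy(listen_host, listen_port, upstream_host, upstream_port):
    """Create (but do not start) a reverse proxy server in front of one upstream."""
    server = http.server.ThreadingHTTPServer((listen_host, listen_port), ReverseProxyHandler)
    server.upstream = (upstream_host, upstream_port)
    return server
```

For example, `make_proxy("0.0.0.0", 8080, "10.0.0.5", 80).serve_forever()` would expose a hypothetical internal service at 10.0.0.5 on port 8080 of the proxy host, without the client ever learning that address.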

Reverse proxies are used for several reasons:

- Load Balancing: If a service is scaled up to multiple servers, the reverse proxy can distribute requests fairly among them.

- Request Routing: Especially in larger, more complicated services, what appears to be a single web service is actually powered by many groups of servers, each specialized in handling only certain calls. In such cases, the reverse proxy evaluates each HTTP request and, depending on its URL, sends it to the appropriate service in the service provider's network.

- Rate Limiting: Whether to protect services from being overloaded or to limit access based on customer service level agreements, reverse proxies can limit the frequency at which requests can be made.

- Compression: Reverse proxies can implement compression and decompression in one common place, rather than having it re-implemented for every service.

- TLS Termination: By handling TLS encryption, decryption, and authentication in a single place, services in the company network do not each have to implement it independently.
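Of these, rate limiting is the easiest to sketch in a few lines. The token bucket below is a common way to implement it. Envoy's local rate limit filter (the subject of a later entry) is also configured in terms of a token bucket with `max_tokens`, `tokens_per_fill`, and `fill_interval`. This Python class only mirrors those three parameters; it is an illustrative assumption, not Envoy's implementation. The clock is injectable so the behavior can be checked without waiting.

```python
import time


class TokenBucket:
    """Allows bursts of up to max_tokens, refilled by tokens_per_fill every fill_interval seconds."""

    def __init__(self, max_tokens, tokens_per_fill, fill_interval, clock=time.monotonic):
        self.max_tokens = max_tokens
        self.tokens_per_fill = tokens_per_fill
        self.fill_interval = fill_interval
        self.clock = clock
        self.tokens = max_tokens          # the bucket starts full
        self.last_fill = clock()

    def _refill(self):
        # Add tokens for every whole interval that has elapsed, capped at max_tokens.
        now = self.clock()
        fills = int((now - self.last_fill) // self.fill_interval)
        if fills > 0:
            self.tokens = min(self.max_tokens, self.tokens + fills * self.tokens_per_fill)
            self.last_fill += fills * self.fill_interval

    def allow(self):
        """Return True if the request may proceed, False if it should be rejected (e.g. with HTTP 429)."""
        self._refill()
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False
```

A proxy would call `allow()` once per incoming request. With `max_tokens=2, tokens_per_fill=1, fill_interval=1.0`, two requests can arrive back to back, after which roughly one request per second is let through.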

Related concepts to reverse proxies and how they are different:

- Network Router: A router can forward incoming traffic on different network ports to different internal hosts (port forwarding), but it cannot perform more advanced functions such as rate limiting or load balancing.

- Load Balancer: A load balancer is more sophisticated than a router, and there are both hardware and cloud-native solutions, such as AWS Elastic Load Balancing. Load balancers can often perform HTTP-level functions, such as routing traffic based on the URL path. Other functionality, such as rate limiting, is often missing.

What is EnvoyProxy?

EnvoyProxy is a reverse proxy that can be deployed like a traditional edge-of-network reverse proxy. Additionally, it is built with orchestration systems like Kubernetes in mind. EnvoyProxy has a very complex model of network messages, which can be manipulated in three ways: static configuration files, dynamic configuration loaded at runtime, and runtime interaction with other processes via gRPC requests.

The root of its configuration, whether configured via a file or at runtime, is a Protocol Buffer message called Bootstrap. Even if you are unfamiliar with Protocol Buffers, the important thing to keep in mind is that they are a typed binary serialization format with a standard mapping to JSON. Envoy also accepts the same structure written in YAML.
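To see what that mapping means in practice, the sketch below writes a small fragment of a Bootstrap message as plain Python data and serializes it to JSON. The conventions are:

- Field names are the protobuf field names.
- Nested messages become nested objects.
- Repeated fields become lists.

The fragment (a single listener address) is illustrative only and is deliberately not a complete, runnable Envoy configuration.

```python
import json

# An incomplete Bootstrap fragment: a single listener address, nothing else.
bootstrap_fragment = {
    "static_resources": {                 # message field -> nested object
        "listeners": [                    # repeated field -> list
            {
                "address": {
                    "socket_address": {
                        "address": "0.0.0.0",
                        "port_value": 8080,   # integer field -> JSON number
                    }
                }
            }
        ]
    }
}

print(json.dumps(bootstrap_fragment, indent=2))
```

In YAML, the same data is written with indentation instead of braces. That is the form used in the configuration files later in this article, and the keys (`static_resources`, `listeners`, `socket_address`, `port_value`) are identical.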

Learning to use Envoy takes a considerable investment of time and effort, especially if you are using it to replace an existing solution, such as Nginx or a load balancer. In many deployments, the best way to use EnvoyProxy is in conjunction with a service mesh control plane like Istio. However, the purpose of this guide is to illustrate direct usage of EnvoyProxy for educational purposes.

EnvoyProxy Terminology

A few terms come up often enough when using EnvoyProxy that explaining them up front will make life much easier. They are also explained in EnvoyProxy: Life of a Request.

- Downstream: The client side of a request. EnvoyProxy names directions as if data flows like a river from the service server to the client that originally made the request, so the client is downstream of the service that it ultimately calls. It's not intuitive, but just imagine a river flowing from server to client.

- Upstream: The direction towards the server that will answer a network request.

- Listener: An Envoy module that binds to a network IP and port, and can accept incoming network connections.

- Filter: A small Envoy module that takes a request as input, can modify that request, e.g. by adding a routing destination or adding a header, and can either let the request flow to another filter, reject the request, or take a terminal action such as sending the request to another network destination. Furthermore, while processing a request, a filter can add information that subsequent filters can read.

- Filter Chain: Contained by a Listener, a filter chain is an ordered list of filters which should be executed on a request, one after the other.

- Cluster: A logical service with endpoints that Envoy can forward requests to. For example, a cluster may represent a set of servers (endpoints) that can process HTTP requests on port 80. Envoy may then apply logic, such as load balancing, to distribute individual requests among the endpoints in the cluster.
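The listener/filter/filter-chain relationship can be modeled in a few lines of Python. This is a toy model, not Envoy's API. Envoy's filters are written in C++, and their interface is richer. The loose resemblance is the "continue" versus "stop iterating" decision each filter makes. All filter names below are hypothetical. Each one demonstrates one capability from the Filter definition:

- modifying the request,
- rejecting it,
- leaving data for later filters,
- forwarding it as the terminal step.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Status(Enum):
    CONTINUE = "continue"  # hand the request to the next filter
    STOP = "stop"          # stop iterating: the request was rejected or handed off


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)
    # Scratch space that earlier filters write and later filters read.
    metadata: Dict[str, str] = field(default_factory=dict)
    response: Optional[str] = None


def add_header(req: Request) -> Status:
    # Modify the request, e.g. by adding a header.
    req.headers["x-example"] = "added-by-filter"
    return Status.CONTINUE


def reject_admin(req: Request) -> Status:
    # Reject the request outright.
    if req.path.startswith("/admin"):
        req.response = "403 Forbidden"
        return Status.STOP
    return Status.CONTINUE


def pick_cluster(req: Request) -> Status:
    # Leave information behind for subsequent filters.
    req.metadata["cluster"] = "service_envoyproxy_io"
    return Status.CONTINUE


def router(req: Request) -> Status:
    # Terminal filter: "forward" the request to the cluster chosen earlier.
    req.response = f"forwarded {req.path} to {req.metadata['cluster']}"
    return Status.STOP


def run_filter_chain(chain: List[Callable[[Request], Status]], req: Request) -> Optional[str]:
    """Run filters in order until one stops iteration."""
    for f in chain:
        if f(req) is Status.STOP:
            break
    return req.response


CHAIN = [add_header, reject_admin, pick_cluster, router]
```

Running `run_filter_chain(CHAIN, Request("/docs"))` passes through all four filters and ends at the router. A path under /admin stops at the second filter and never reaches a cluster.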

EnvoyProxy High-Level Architecture

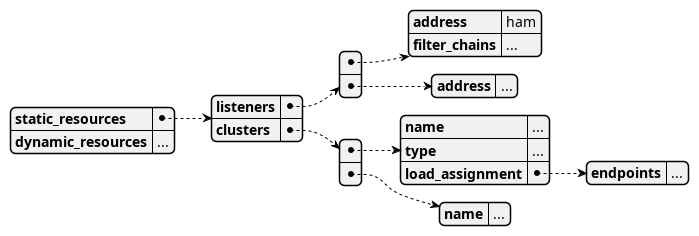

Armed with this basic knowledge of Envoy terminology, it is a little bit easier to understand the structure of how Envoy is built, which is essential if you want to understand its very complex configuration files.

Source: https://www.envoyproxy.io/docs/envoy/latest/intro/life_of_a_request#high-level-architecture

Requests come in from clients (downstream), which connect to an IP address and port bound by an Envoy Listener. Envoy Listeners contain a Filter Chain, which is an ordered list of Filters that make incremental changes to that request. For HTTP traffic, one of these filters, the HTTP Connection Manager, runs its own chain of HTTP filters. The last of those is a very important one called the HTTP Router. The HTTP Router filter is special because it terminates the filter chain and actually tells Envoy to forward the request to a service (an upstream cluster).

Once a request has been forwarded by the HTTP Router Filter to a Cluster, that Cluster will contain additional information about the endpoint(s) to forward to as well as any load-balancing strategy needed to choose among several endpoints.
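As an illustration of "choosing among several endpoints", here is a smooth weighted round-robin picker, the scheme popularized by Nginx. It is a stand-in, not Envoy's own scheduler. It only shows how per-endpoint weights (like the `load_balancing_weight` field used later in this article) can translate into a distribution of requests. The endpoint addresses are hypothetical.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Picks endpoints in proportion to their weights, interleaving rather than bursting."""

    def __init__(self, weights: Dict[str, int]):
        self.weights = dict(weights)                   # endpoint -> weight
        self.current = {ep: 0 for ep in self.weights}  # running credit per endpoint
        self.total = sum(self.weights.values())

    def pick(self) -> str:
        # Every endpoint earns credit equal to its weight ...
        for ep, weight in self.weights.items():
            self.current[ep] += weight
        # ... then the endpoint with the most credit is chosen and pays back the total.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen
```

With weights 5, 1, and 1, every seven picks send five requests to the first endpoint and one to each of the others. The light endpoints are spread out rather than all being served back to back.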

Configuring EnvoyProxy

Whew, that is a lot of introduction. As you can see, Envoy requires you to be familiar with a fairly large number of technologies and concepts before you can make serious progress. While this means there is a bit more homework to do up front, the payoff is being able to use a tool that is extremely flexible and provides a large array of features. When you need certain features, there are often not very many options. For example, when it comes to rate limiting, the common choices are Envoy, Apache HTTP Server, Nginx, or implementing it ad hoc in each service that needs it.

EnvoyProxy Static Configuration Files: Outer Structure

As mentioned in the introduction, EnvoyProxy uses Protocol Buffer messages for most of its internal data structures that can be configured. The root object type is called Bootstrap, which should serve as the starting point for exploring the configuration options that exist (and there are a LOT).

For this exercise, we will be looking at an Envoy configuration file called envoy-basic.yaml. At first, this will be a bit overwhelming, but after the explanations further below, it will become a useful resource.

A few of the more important configuration options are presented in the skeletal configuration file below. The format is very complicated, so it will be introduced a small piece at a time.

The structure looks very similar to what was discussed under EnvoyProxy Terminology. The first unusual things to notice are the fields “static_resources” and “dynamic_resources”. Under “static_resources”, one can find configuration settings that are loaded when the EnvoyProxy server starts. Under “dynamic_resources”, one can find the configuration needed to retrieve configuration settings dynamically at runtime. This article only uses “static_resources”.

In YAML format, the file looks like this so far:

```yaml
# Resources loaded at boot, rather than dynamically via APIs.
# https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/bootstrap/v3/bootstrap.proto#envoy-v3-api-msg-config-bootstrap-v3-bootstrap-staticresources
static_resources:
  # A listener wraps an address to bind to and filters to run on messages on that address.
  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener.proto#envoy-v3-api-msg-config-listener-v3-listener
  listeners:
    # The address of an interface to bind to. Interfaces can be sockets, pipes, or internal addresses.
    # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/core/v3/address.proto#envoy-v3-api-msg-config-core-v3-address
    - address: ...
      # Filter chains wrap several related configurations, e.g. match criteria, TLS context, filters, etc.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener_components.proto#envoy-v3-api-msg-config-listener-v3-filterchain
      filter_chains:
        # An ordered list of filters to apply to connections.
        # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener_components.proto#envoy-v3-api-msg-config-listener-v3-filter
        - filters: ...
  # Configurations for logically similar upstream hosts, called clusters, that Envoy connects to.
  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/cluster/v3/cluster.proto#envoy-v3-api-msg-config-cluster-v3-cluster
  clusters:
    - name: service_envoyproxy_io
      # The cluster type; in this case, discover the target via a DNS lookup.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/cluster/v3/cluster.proto#envoy-v3-api-enum-config-cluster-v3-cluster-discoverytype
      type: LOGICAL_DNS
      # For endpoints that are part of the cluster, determine how requests are distributed.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint.proto#envoy-v3-api-msg-config-endpoint-v3-clusterloadassignment
      load_assignment:
        cluster_name: service_envoyproxy_io
        # A list of endpoints that belong to this cluster.
        # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint_components.proto#envoy-v3-api-msg-config-endpoint-v3-localitylbendpoints
        endpoints: ...
```

Beyond this, one can see that there are Envoy Listeners and Envoy Clusters. The listeners have an address that they bind to and a list of filters in filter_chains to run on incoming messages. Eventually, a router filter is reached, which terminates the filter chain and sends the message to one of the clusters. Just as described in the terminology, an Envoy Cluster represents one or more endpoints (hosts) that make up a logical service. Those endpoints, and the information used to distribute load among them, are described under load_assignment.

EnvoyProxy Static Configuration Files: Clusters

So far so good: we have an outer skeleton of the configuration, complete with all the pieces that we know must exist from the Envoy architecture, including listeners, filters, clusters, and endpoints. Let's focus on creating a concrete cluster that we can test with.

When looking at the Cluster documentation, we see that there is a dizzying number of options. We want something that we can set up easily to begin testing with, that doesn’t require another complicated setup. Thus we will create a cluster that points to an already existing resource. The site https://www.envoyproxy.io is already being used as a reference to documentation, so we can use it as a resource in our example.

```yaml
static_resources:
  # Configurations for logically similar upstream hosts, called clusters, that Envoy connects to.
  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/cluster/v3/cluster.proto#envoy-v3-api-msg-config-cluster-v3-cluster
  clusters:
    -
      # Every cluster must have a unique name, which we use for routing.
      name: service_envoyproxy_io
      # The LOGICAL_DNS type is suitable for resources that use DNS load-balancing.
      type: LOGICAL_DNS
      # The time to wait for a connection before an error is returned.
      connect_timeout: 500s
      # The endpoints/hosts assigned to this cluster, used for load balancing.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint.proto#envoy-v3-api-msg-config-endpoint-v3-clusterloadassignment
      load_assignment:
        # The cluster name is repeated here.
        cluster_name: service_envoyproxy_io
        # The list of endpoints and the locality to load-balance to.
        # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint_components.proto#envoy-v3-api-msg-config-endpoint-v3-localitylbendpoints
        endpoints:
          -
            # If you have multiple zones or regions, specify them here.
            # locality: ...
            # The endpoints associated with the locality.
            # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint_components.proto#envoy-v3-api-msg-config-endpoint-v3-lbendpoint
            lb_endpoints:
              -
                # An actual endpoint (which requires more nesting).
                # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/endpoint/v3/endpoint_components.proto#envoy-v3-api-msg-config-endpoint-v3-endpoint
                endpoint:
                  # More nesting until we get to an actual host address.
                  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/core/v3/address.proto#envoy-v3-api-msg-config-core-v3-address
                  address:
                    # There are many address types; we want an Internet socket.
                    socket_address:
                      # Glad you made it this far, we finally entered an address.
                      address: www.envoyproxy.io
                      port_value: 443
                # (Optional) If uneven load balancing is desired, weights can control it.
                load_balancing_weight: 1
      # Enable TLS so we can communicate with an HTTPS endpoint.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/core/v3/base.proto#envoy-v3-api-msg-config-core-v3-transportsocket
      transport_socket:
        name: envoy.transport_sockets.tls
        typed_config:
          # https://www.envoyproxy.io/docs/envoy/latest/api-v3/extensions/transport_sockets/tls/v3/tls.proto
          "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext
          # Server Name Indication, the server being contacted in step 1 of the TLS handshake.
          sni: www.envoyproxy.io
```

Now, at this point you might be thinking, “What is this mess? I just wanted to point to www.envoyproxy.io.” And yes, you are right, it is a mess. It is hard to understand, and the documentation is scattered and confusing to follow. However, EnvoyProxy runs quite efficiently, and it supports an extremely large set of features, including rate limiting.
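One detail in the cluster above is worth unpacking: `type: LOGICAL_DNS`. Per the Envoy cluster documentation, a LOGICAL_DNS cluster uses only the first IP address returned by DNS when it needs a new connection. A STRICT_DNS cluster instead treats every returned address as a separate host. The Python sketch below imitates both behaviors with `socket.getaddrinfo`. It is a simplification under stated assumptions: Envoy resolves asynchronously and re-resolves periodically (see the cluster's `dns_refresh_rate`), while these functions resolve once, synchronously.

```python
import socket
from typing import List, Tuple


def logical_dns_address(host: str, port: int) -> Tuple[str, int]:
    """LOGICAL_DNS-style: resolve the name and use only the first address returned."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    sockaddr = infos[0][4]  # (ip, port) for IPv4; (ip, port, flowinfo, scope_id) for IPv6
    return sockaddr[0], sockaddr[1]


def strict_dns_addresses(host: str, port: int) -> List[Tuple[str, int]]:
    """STRICT_DNS-style, for contrast: every returned address becomes a separate host."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    return sorted({(info[4][0], info[4][1]) for info in infos})
```

For a large site like www.envoyproxy.io, which may return different addresses on each lookup, the LOGICAL_DNS behavior avoids treating every rotated address as a new member of the cluster.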

OK, we finally have a cluster defined: a target where Envoy can send traffic. However, this configuration is not yet useful, because we have no place to receive traffic yet.

Nevertheless, if you are following along and have installed Envoy, we can try out what we have so far. If your configuration is saved in the file “envoy-basic.yaml”, then we can validate it with the command:

```bash
$ envoy -c envoy-basic.yaml --mode validate
...
configuration 'envoy-basic.yaml' OK
```

EnvoyProxy Static Configuration Files: HTTP Filters

Now that we have an actual place to send traffic, we need listeners to receive incoming requests and filters that say what should be done with those messages. To start with, let's configure Envoy to listen on port 8080 using standard HTTP, and then route that traffic to our cluster at https://www.envoyproxy.io.

Like the configuration of clusters, this configuration is quite verbose. Before we dive into the YAML, here is a brief summary of the configuration being added:

- listener: This object binds to a port to receive network connections.
  - address: We bind to network address “0.0.0.0:8080”.
  - filter_chains: You can have different filter chains that apply depending on the connection type, etc.
    - filters: We need filters to process the traffic, at a minimum to route it.
      - HTTP Connection Manager Filter: We add this filter in order to decode a stream of bytes into HTTP messages and set routing rules.
        - domains: If we want, we can have separate logic and routing per domain, e.g. app.example.com.
        - routes: We can send different kinds of requests to different clusters. For now, we send everything (path prefix “/”) to our cluster.
        - http_filters: We can add additional HTTP-aware filters to run.
          - HTTP Router Filter: We add a terminal filter that sends the message along to its destination.

And finally, the changes to the YAML file:

```yaml
static_resources:
  # The configuration of clusters is discussed above.
  clusters: ...
  # A listener wraps an address to bind to and filters to run on messages on that address.
  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener.proto#envoy-v3-api-msg-config-listener-v3-listener
  listeners:
    # The address of an interface to bind to. Interfaces can be sockets, pipes, or internal addresses.
    # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/core/v3/address.proto#envoy-v3-api-msg-config-core-v3-address
    - address:
        # This address is for a network socket, with an IP and a port.
        # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/core/v3/address.proto#envoy-v3-api-msg-config-core-v3-socketaddress
        socket_address:
          # The value 0.0.0.0 indicates that all interfaces will be bound to.
          address: 0.0.0.0
          # The IP port number to bind to.
          port_value: 8080
      # Filter chains wrap several related configurations, e.g. match criteria, TLS context, filters, etc.
      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener_components.proto#envoy-v3-api-msg-config-listener-v3-filterchain
      filter_chains:
        -
          # If desired, a set of filters can be applied conditionally.
          # filter_chain_match: ...
          # An ordered list of filters to apply to connections.
          # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/listener/v3/listener_components.proto#envoy-v3-api-msg-config-listener-v3-filter
          filters:
            - name: envoy.filters.network.http_connection_manager
              # A generic configuration whose fields vary with its "@type".
              typed_config:
                # The HttpConnectionManager filter converts raw data into HTTP messages and handles
                # logging, tracing, header manipulation, routing, and statistics.
                # https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/http/http_connection_management#arch-overview-http-conn-man
                # https://www.envoyproxy.io/docs/envoy/latest/api-v3/extensions/filters/network/http_connection_manager/v3/http_connection_manager.proto#extension-envoy-filters-network-http-connection-manager
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                # The human readable prefix used when emitting statistics.
                stat_prefix: ingress_http
                # The static routing table used by this filter. Individual routes may also add "rate
                # limit descriptors", essentially tags, to requests which may be referenced in the
                # "http_filters" config.
                # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/route/v3/route.proto#envoy-v3-api-msg-config-route-v3-routeconfiguration
                route_config:
                  name: local_route
                  # An array of virtual hosts which will compose the routing table.
                  # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/route/v3/route_components.proto#envoy-v3-api-msg-config-route-v3-virtualhost
                  virtual_hosts:
                    - name: backend
                      # A list of domains, e.g. *.foo.com, that will match this virtual host.
                      domains:
                        - "*"
                      # A list of routes to match against requests; the first one that matches will be used.
                      # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/route/v3/route_components.proto#envoy-v3-api-msg-config-route-v3-route
                      routes:
                        -
                          # The conditions that a request must satisfy to follow this route.
                          # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/route/v3/route_components.proto#envoy-v3-api-msg-config-route-v3-routematch
                          match:
                            # A match against the beginning of the :path pseudo-header.
                            prefix: "/"
                          # The routing action to take if the request matches the conditions.
                          # https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/route/v3/route_components.proto#envoy-v3-api-msg-config-route-v3-routeaction
                          route:
                            # During forwarding, set the request host value.
                            host_rewrite_literal: www.envoyproxy.io
                            # Send the request to the cluster defined in this file.
                            cluster: service_envoyproxy_io
                # A chain of filters to run on the message which can assume that the
                # message is HTTP and can access data set by the HTTP Connection Manager filter.
                # https://www.envoyproxy.io/docs/envoy/latest/api-v3/extensions/filters/network/http_connection_manager/v3/http_connection_manager.proto#envoy-v3-api-msg-extensions-filters-network-http-connection-manager-v3-httpfilter
                http_filters:
                  # The router filter performs HTTP forwarding with optional logic for retries, statistics, etc.
                  # https://www.envoyproxy.io/docs/envoy/latest/configuration/http/http_filters/router_filter#config-http-filters-router
                  - name: envoy.filters.http.router
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
```

When reading Envoy configuration files, there are a few patterns that come up often. Sometimes an object being configured can be one of several different types; for example, there is a plethora of filters available. In such cases, the object typically has a typed_config section with a field named @type, which holds the precise, fully qualified name of the message type being described. The value, such as type.googleapis.com/envoy.extensions.filters.http.router.v3.Router, is standardized. It can therefore help you find documentation when working with an Envoy configuration that is not documented.
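Because the `@type` value is standardized, it can be taken apart mechanically. The helpers below are hypothetical. `parse_type_url` splits the value into the protobuf package and the message name. `docs_api_directory` turns an `envoy.*` package into the `api-v3/...` directory that appears in the documentation links throughout this article. That mapping is a heuristic inferred from those links, for example `envoy.extensions.transport_sockets.tls.v3` alongside `api-v3/extensions/transport_sockets/tls/v3/tls.proto`. It is not a documented rule, and the `.proto` file name itself still has to be found by hand.

```python
from typing import Tuple

TYPE_URL_PREFIX = "type.googleapis.com/"


def parse_type_url(type_url: str) -> Tuple[str, str]:
    """Split an "@type" value into (protobuf package, message name)."""
    if not type_url.startswith(TYPE_URL_PREFIX):
        raise ValueError(f"unexpected type URL: {type_url!r}")
    full_name = type_url[len(TYPE_URL_PREFIX):]
    package, _, message = full_name.rpartition(".")
    return package, message


def docs_api_directory(package: str) -> str:
    """Heuristic: map an envoy.* package to the api-v3/ directory used in documentation URLs."""
    if not package.startswith("envoy."):
        raise ValueError(f"not an Envoy package: {package!r}")
    return "api-v3/" + package[len("envoy."):].replace(".", "/")
```

For the router filter, `parse_type_url` yields the package `envoy.extensions.filters.http.router.v3` and the message `Router`. Searching the Envoy docs for either name usually leads straight to the right page.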

Another common pattern is what appears to be excessive nesting, e.g. a configuration like endpoint => address => socket_address => address => .... At first glance, this nesting may seem pointless and burdensome. However, the nesting is usually there for one of two reasons:

- There are multiple options for the kind of data to add, e.g. a socket_address vs. a pipe.

- The outer object actually comes with additional information, like a load balancing weight.
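Both reasons are visible if you walk the cluster configuration from this article as plain data. The hypothetical `endpoint_addresses` function below flattens a cluster's `load_assignment` into (host, port, weight) tuples. The `address` level has to check which kind of address it holds (reason 1). The weight sits on the outer `lb_endpoints` entry rather than on the address (reason 2). Treating a missing weight as 1 and skipping non-socket addresses are assumptions of this sketch.

```python
from typing import Any, Dict, List, Tuple


def endpoint_addresses(cluster: Dict[str, Any]) -> List[Tuple[str, int, int]]:
    """Flatten a cluster's load_assignment into (host, port, weight) tuples."""
    results = []
    for locality in cluster["load_assignment"]["endpoints"]:    # one entry per locality
        for lb_endpoint in locality["lb_endpoints"]:             # one entry per host
            address = lb_endpoint["endpoint"]["address"]
            # Reason 1: an address can be one of several kinds; only sockets are handled here.
            if "socket_address" not in address:
                continue
            socket_address = address["socket_address"]
            # Reason 2: the weight lives on the outer object, next to "endpoint".
            weight = lb_endpoint.get("load_balancing_weight", 1)
            results.append((socket_address["address"], socket_address["port_value"], weight))
    return results


# The cluster from this article, written as the data structure its YAML describes.
CLUSTER = {
    "name": "service_envoyproxy_io",
    "load_assignment": {
        "cluster_name": "service_envoyproxy_io",
        "endpoints": [
            {
                "lb_endpoints": [
                    {
                        "endpoint": {
                            "address": {
                                "socket_address": {"address": "www.envoyproxy.io", "port_value": 443}
                            }
                        },
                        "load_balancing_weight": 1,
                    }
                ]
            }
        ],
    },
}
```

Calling `endpoint_addresses(CLUSTER)` reduces all of that nesting to a single entry for www.envoyproxy.io on port 443 with weight 1.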

This time, we can start up Envoy and see that our requests get routed.

The file used in this example can be found in envoy-basic.yaml.

In one terminal, run the command:

```bash
$ envoy -c envoy-basic.yaml
```

Then try to browse to http://localhost:8080, and you should see the Envoy webpage.
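When the browser's request reaches the listener, the HTTP Connection Manager consults route_config to decide where it goes. The sketch below imitates that decision in Python for the configuration in this article. It picks a virtual host by domain (exact match before the catch-all "*"), takes the first route whose prefix matches the path, and applies host_rewrite_literal. It is a simplified model, not Envoy's matcher. It ignores wildcard domains like *.foo.com, header matchers, and everything else a real RouteConfiguration can express. When nothing matches, the sketch returns None, where Envoy would reply with a 404.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Route:
    prefix: str                          # match.prefix
    cluster: str                         # route.cluster
    host_rewrite: Optional[str] = None   # route.host_rewrite_literal


@dataclass
class VirtualHost:
    name: str
    domains: List[str]
    routes: List[Route]


def select_virtual_host(virtual_hosts: List[VirtualHost], host: str) -> Optional[VirtualHost]:
    # Exact domain matches win over the catch-all "*"; wildcards such as *.foo.com are not handled.
    for vh in virtual_hosts:
        if host in vh.domains:
            return vh
    for vh in virtual_hosts:
        if "*" in vh.domains:
            return vh
    return None


def route_request(virtual_hosts: List[VirtualHost], host: str, path: str) -> Optional[Tuple[str, str]]:
    """Return (cluster, Host header to send upstream), or None if nothing matches."""
    vh = select_virtual_host(virtual_hosts, host)
    if vh is None:
        return None
    for route in vh.routes:              # routes are tried in order; the first match wins
        if path.startswith(route.prefix):
            return route.cluster, route.host_rewrite or host
    return None


# Mirrors the route_config in the listener configuration above.
LOCAL_ROUTE = [
    VirtualHost(
        name="backend",
        domains=["*"],
        routes=[Route(prefix="/", cluster="service_envoyproxy_io", host_rewrite="www.envoyproxy.io")],
    )
]
```

With this table, a request for http://localhost:8080/docs is sent to service_envoyproxy_io with its Host header rewritten to www.envoyproxy.io. Order matters once more routes are added. In a hypothetical table with `Route("/api", "api_cluster")` before `Route("/", "web_cluster")`, /api/users reaches api_cluster. Putting the "/" route first would send everything to web_cluster.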

Closing Remarks

Despite its complicated architectural model and configuration file format, Envoy is a very powerful reverse proxy system. With any luck, the core concepts that help one understand what is going on in an Envoy configuration are now clear.

In subsequent blog entries, we will explore practical use cases such as routing to multiple services, rate limiting, load balancing, TLS termination, and more.
